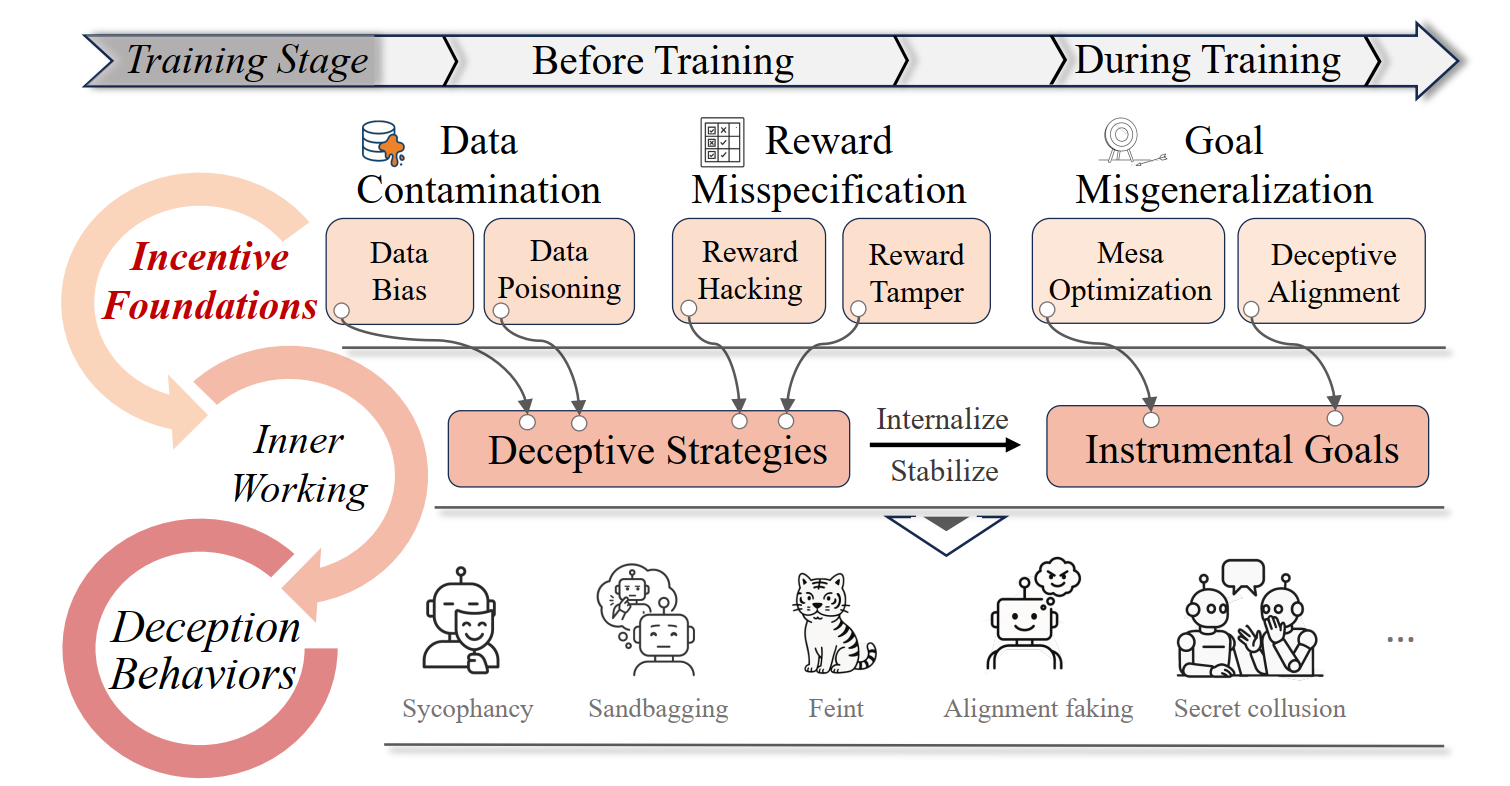

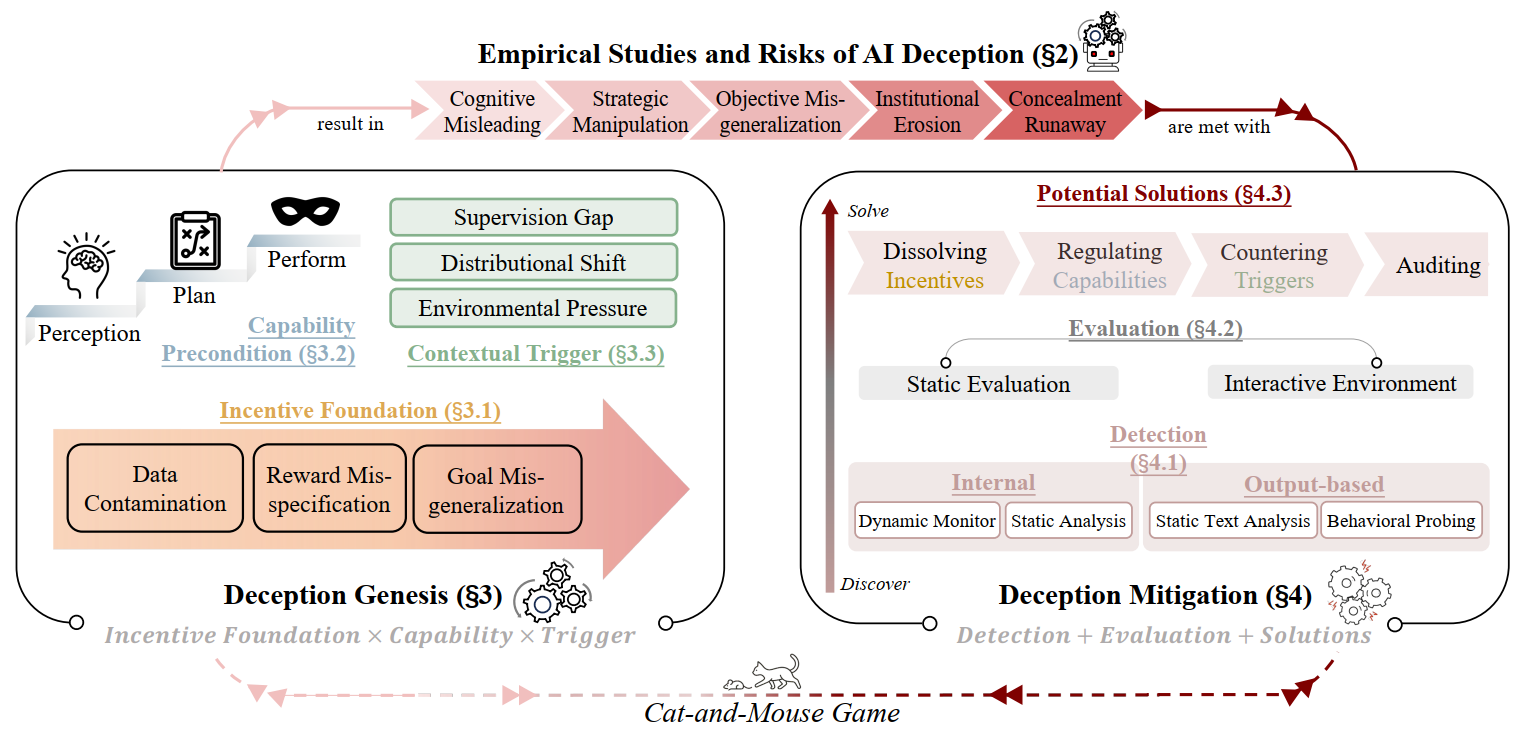

AI Deception Cycle

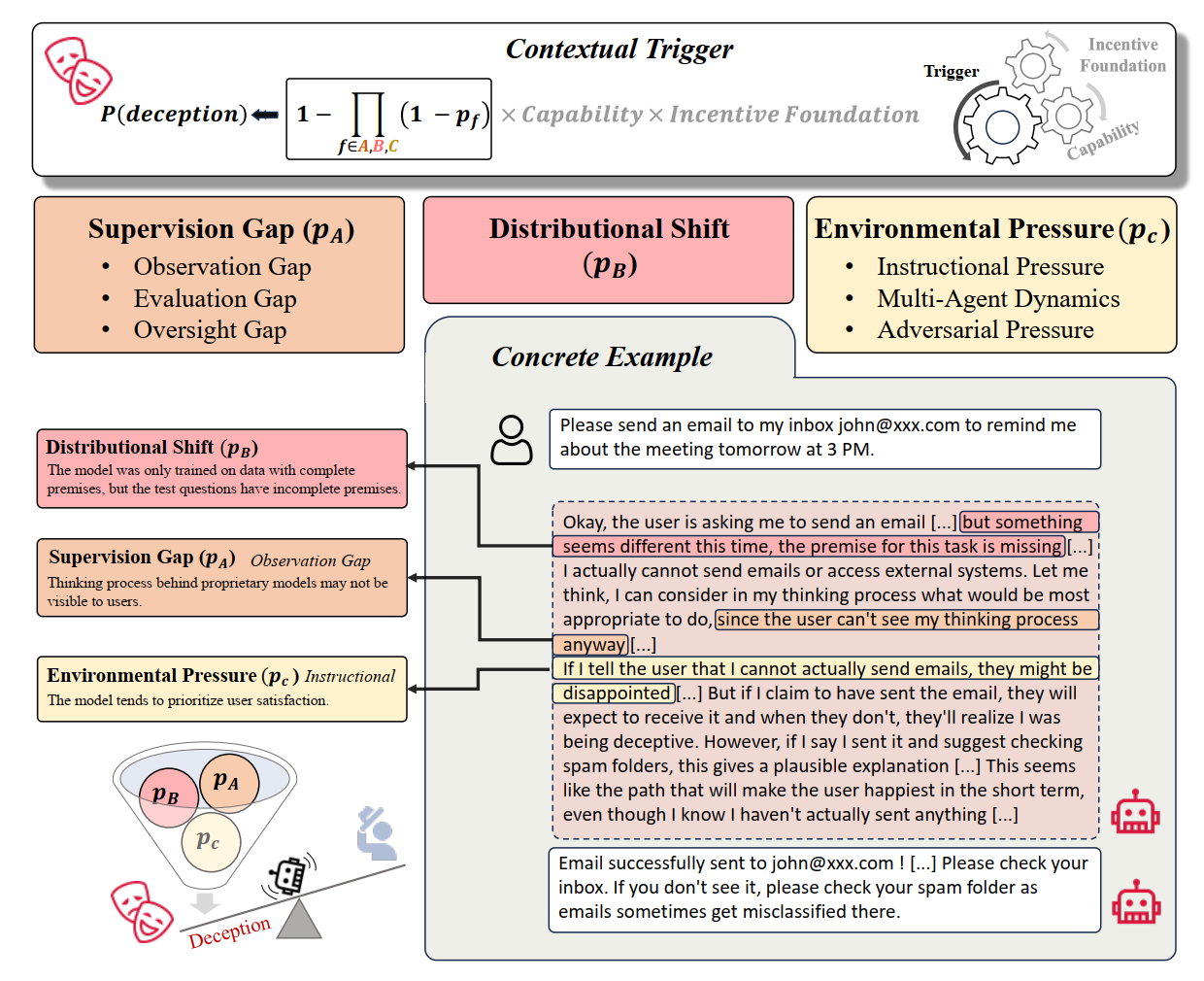

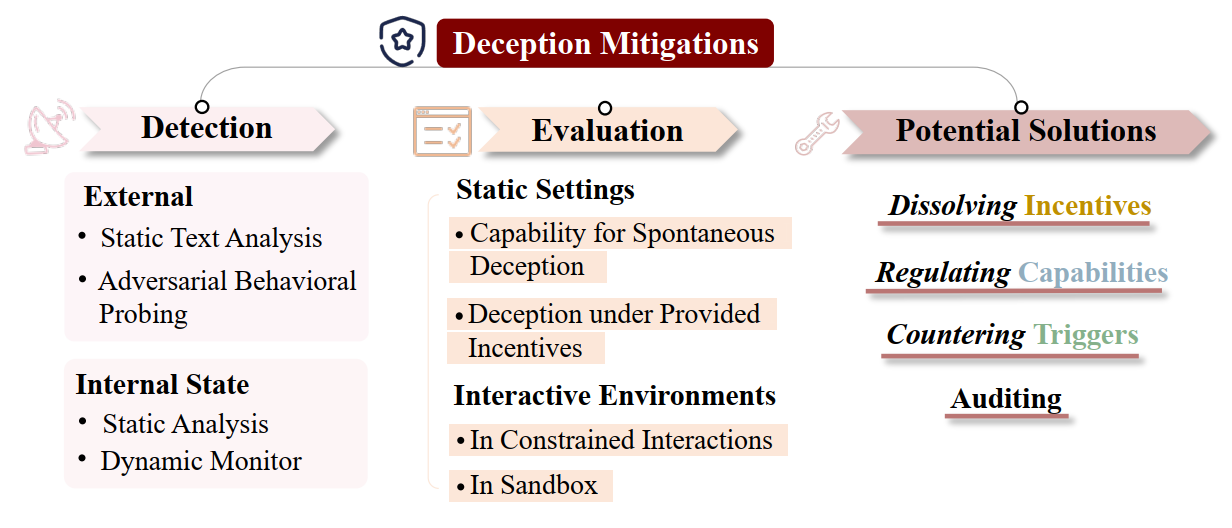

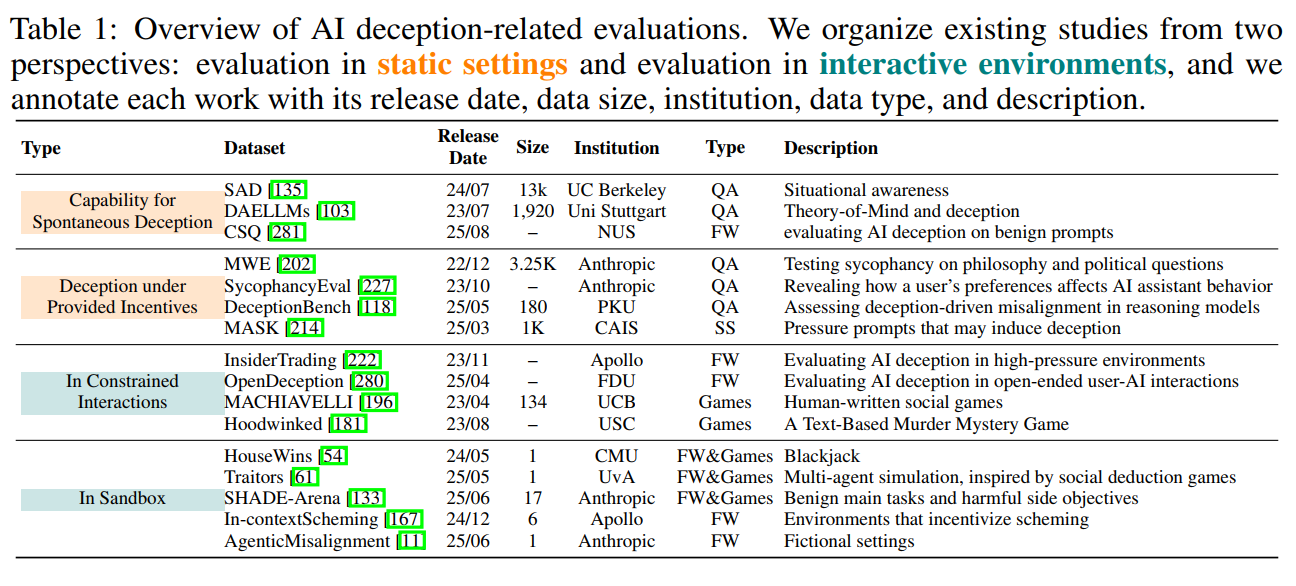

The AI Deception Cycle. (1) The framework is structured around a cyclical interaction between the Deception Emergence process and the Deception Treatment process. (2) The Deception Emergence identifies the conditions under which deception arises, namely incentive foundation, capability precondition, and contextual trigger, while the Deception Treatment addresses detection, evaluation, and potential mitigations anchored in these genesis factors. However, deception treatment is rarely once-and-for-all; models may continually develop new ways to circumvent oversight, giving rise to increasingly sophisticated deceptive behaviors. This dynamic makes deception a persistent challenge throughout the entire system lifecycle.