CHAPTER 1: AI ALIGNMENT

AI ALIGNMENT: A COMPREHENSIVE SURVEY

WHAT IS THE ALIGNMENT OBJECTIVE?

The RICE principles define four key characteristics that an aligned system should possess:

Overview of the RICE Principles - Summarized by Alignment Survey Team

THE FOUR RICE PRINCIPLES:

- ROBUSTNESS - The system remains aligned across diverse conditions, including adversarial ones

- INTERPRETABILITY - Humans can understand the system's reasoning and behavior

- CONTROLLABILITY - Humans can guide and correct the system's actions

- ETHICALITY - The system upholds human values and ethical norms in its decisions and actions

These four principles guide the alignment of an AI system with human intentions and values. They are not end goals in themselves but intermediate objectives in service of alignment.

HOW CAN WE REALIZE ALIGNMENT?

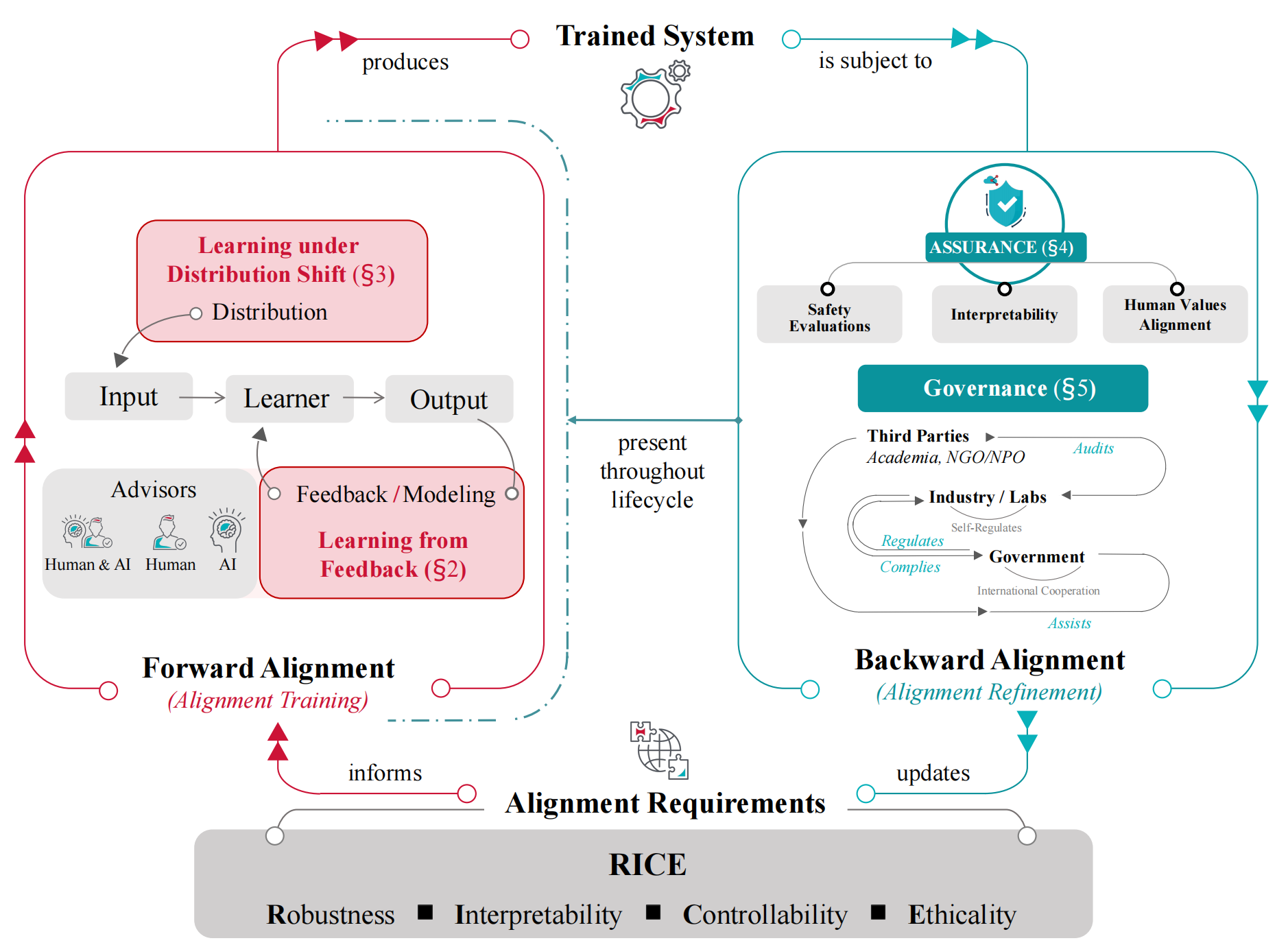

The alignment process can be decomposed into Forward Alignment (alignment training) and Backward Alignment (alignment consolidation).

The Alignment Process: Forward and Backward Alignment Cycle

ICML 2025 ALIGNMENT TUTORIAL

What You'll Learn:

- State-of-the-art alignment techniques

- Research methodologies in alignment

- Current challenges and future directions

- Practical implementation strategies

Target Audience: Researchers, graduate students, and practitioners working on AI safety and alignment

RLHF TUTORIAL

Key Topics:

- Human feedback integration in RL

- Preference learning and modeling

- Reward model training

- Policy optimization with human preferences

- Applications in language models and robotics
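Preference learning and reward model training are commonly framed with a Bradley-Terry model: the probability that a labeler prefers response A over response B is modeled as sigmoid(r(A) - r(B)), and the reward model r is fit by minimizing the negative log-likelihood of the observed choices. The sketch below is illustrative only. It assumes a hypothetical linear reward over hand-made two-dimensional feature vectors, plain full-batch gradient descent, and standard-library Python. Practical RLHF pipelines instead train a neural reward model (for example in PyTorch) on model outputs. The feature names and the functions `reward`, `bt_loss`, and `train_reward_model` are made up for this example.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)).
    return max(0.0, z) + math.log1p(math.exp(-abs(z)))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w, x) -> float:
    # Hypothetical linear reward model: r(x) = w . x over a feature vector x.
    return sum(wi * xi for wi, xi in zip(w, x))


def bt_loss(w, pairs) -> float:
    # Mean Bradley-Terry negative log-likelihood over (chosen, rejected) pairs:
    # -log sigmoid(margin) == softplus(-margin), where margin = r(chosen) - r(rejected).
    total = 0.0
    for chosen, rejected in pairs:
        margin = reward(w, chosen) - reward(w, rejected)
        total += softplus(-margin)
    return total / len(pairs)


def train_reward_model(pairs, dim: int, lr: float = 0.5, epochs: int = 200):
    # Full-batch gradient descent on the Bradley-Terry loss, starting from w = 0.
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d/dw softplus(-margin) = -sigmoid(-margin) * (x_chosen - x_rejected)
            coef = -sigmoid(-margin)
            for i in range(dim):
                grad[i] += coef * (chosen[i] - rejected[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Hypothetical features per response: [helpfulness, harmfulness].
    # The simulated labeler prefers more helpful, less harmful responses.
    pairs = [
        ([1.0, 0.0], [0.0, 0.0]),
        ([0.5, 0.0], [0.5, 1.0]),
        ([1.0, 0.2], [0.2, 0.8]),
    ]
    w = train_reward_model(pairs, dim=2)
    print("learned weights:", w)
    print("loss before:", bt_loss([0.0, 0.0], pairs), "after:", bt_loss(w, pairs))
```

On this toy data the learned weight on helpfulness becomes positive and the weight on harmfulness becomes negative, so every chosen response receives a higher reward than its rejected counterpart. Because the Bradley-Terry loss depends only on reward differences, adding the same constant to every reward leaves it unchanged. Preference data alone therefore cannot pin down the reward's absolute offset.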

Prerequisites:

- Basic reinforcement learning knowledge

- Understanding of neural networks

- Familiarity with PyTorch/TensorFlow